[pac_divi_table_of_contents included_headings="on|on|on|off|off|off" minimum_number_of_headings="6" scroll_speed="8500ms" level_markers_1="decimal" level_markers_3="none" title_container_bg_color="#004274" _builder_version="4.22.2" _module_preset="default"...

Accelerate Data Workflows: Optimize Omnichannel sales with Delta Cache and Skipping

Databricks’ Delta Cache and Data Skipping are powerful features that can enhance the performance of data operations, especially for use cases like omnichannel sales operations, where large amounts of transactional and analytical data need to be processed efficiently.

Use Case: Omnichannel Sales Operations

Omnichannel sales involve integrating data from various channels (e.g., online stores, physical stores, mobile apps, and customer support) to provide a seamless customer experience. This requires real-time or near-real-time data processing to:

- Track inventory across channels.

- Optimize pricing strategies.

- Personalize customer experiences.

- Analyze sales performance across channels.

Challenges in Omnichannel Sales Data:

- Huge data volume (sales transactions, inventory updates, customer interactions).

- Query performance bottlenecks due to complex joins and aggregations.

- Need for quick access to frequently queried data.

How Delta Cache and Data Skipping Help

- Delta Cache

What it is: Delta Cache automatically caches the most frequently accessed data in memory on the worker nodes of your Databricks cluster.

Benefits:

-

- Speeds up repetitive queries by avoiding disk I/O.

- Reduces cluster resource consumption.

- Ideal for frequently queried data like customer purchase histories or inventory levels.

- Data Skipping

What it is: Data Skipping reduces the amount of data scanned by leveraging metadata to skip irrelevant data during query execution.

Benefits:

-

- Optimizes query performance by scanning only the necessary data blocks.

- Particularly useful for large tables with partitioned data (e.g., sales data partitioned by date or region).

- Enhances analytical queries like sales trend analysis for a specific time range or product category.

Need an expert to implement these solutions? Hire a developer today and optimise your data workflows!

Implementation for Omnichannel Sales Operations

Example Use Case: Sales Trend Analysis

Analyze sales trends for a specific product category across multiple regions and time periods.

Data Structure:

- Table: sales_data

- Partitions: region, category, date

Code Example with Delta Cache and Data Skipping

from pyspark.sql import SparkSession

# Initialize Spark session

spark = SparkSession.builder.appName(“Delta Cache Example”).getOrCreate()

# Load Delta table

sales_data = spark.read.format(“delta”).load(“/mnt/sales_data”)

# Enable Delta Cache for the table

sales_data.cache() # This caches the data in memory for faster access

# Example query: Analyze sales trends for a specific product category

product_category = “Electronics”

sales_trends = sales_data.filter(

(sales_data[“category”] == product_category) &

(sales_data[“date”] >= “2024-01-01”) &

(sales_data[“date”] <= “2024-06-30”)

).groupBy(“region”).sum(“sales_amount”)

sales_trends.show()

Optimizing with Data Skipping

To optimize for Data Skipping, ensure the data is partitioned correctly.

# Writing data with partitions for skipping

sales_data.write.format(“delta”).mode(“overwrite”).partitionBy(“region”, “category”, “date”).save(“/mnt/sales_data_partitioned”)

# Query the partitioned data

partitioned_data = spark.read.format(“delta”).load(“/mnt/sales_data_partitioned”)

# Skipping irrelevant partitions automatically

regional_sales = partitioned_data.filter(

(partitioned_data[“region”] == “North America”) &

(partitioned_data[“category”] == “Electronics”)

).select(“date”, “sales_amount”)

regional_sales.show()

Important Tips

- Partition Strategically:

- Use relevant dimensions like region, category, or date to minimize the data scanned during queries.

- Enable Auto-Optimize:

- Use Delta Lake’s Auto Optimize to maintain efficient file layouts and indexing.

- SET spark.databricks.delta.optimizeWrite.enabled = true;

- SET spark.databricks.delta.autoCompact.enabled = true;

- Monitor and Tune Cache:

- Use Databricks monitoring tools to ensure the Delta Cache is used effectively. Cache frequently queried data only.

- Leverage Z-Order Clustering:

- For queries that involve multiple columns, Z-Order clustering can further improve Data Skipping performance.

- sales_data.write.format(“delta”).option(“zorder”, “region, date”).save(“/mnt/sales_data”)

Benefits in Omnichannel Sales Operations

- Faster Queries: Reduced latency for reports and dashboards.

- Cost Efficiency: Optimized cluster resource usage.

- Scalability: Handles growing data volumes with efficient partitioning and caching.

By combining Delta Cache and Data Skipping with best practices, you can achieve real-time insights and a seamless omnichannel sales strategy.

Achieving the similar functionality in Snowflake provides similar functionalities to Databricks’ Delta Cache and Data Skipping, although implemented differently. Here’s how these functionalities map to Snowflake and a comparison:

Snowflake Functionalities

- Caching Mechanism in Snowflake:

- Snowflake automatically caches query results and table metadata in its Result Cache and Metadata Cache.

- While not identical to Databricks’ Delta Cache, Snowflake’s Result Cache accelerates queries by serving previously executed results without re-execution, provided the underlying data has not changed.

- Data Skipping in Snowflake:

- Snowflake uses Micro-Partition Pruning, an efficient mechanism to skip scanning unnecessary micro-partitions based on query predicates.

- This is conceptually similar to Data Skipping in Databricks, leveraging metadata to read only the required micro-partitions for a query.

Comparison: Delta Cache vs. Snowflake Caching

| Feature | Databricks (Delta Cache) | Snowflake (Result/Metadata Cache) |

| Scope | Caches data blocks on worker nodes for active jobs. | Caches query results and metadata at the compute and storage layer. |

| Use Case | Accelerates repeated queries on frequently accessed datasets. | Reuses results of previously executed queries (immutable datasets). |

| Cluster Dependency | Specific to cluster; invalidated when cluster is restarted. | Independent of clusters; cache persists until the underlying data changes. |

| Control | Manually enabled with .cache() or Spark UI. | Fully automated; no user intervention required. |

Comparison: Data Skipping vs. Micro-Partition Pruning

| Feature | Databricks (Data Skipping) | Snowflake (Micro-Partition Pruning) |

| Granularity | Operates at the file/block level based on Delta Lake metadata. | Operates at the micro-partition level (small chunks of columnar data). |

| Partitioning | Requires explicit partitioning (e.g., by date, region). | Automatically partitions data into micro-partitions; no manual setup needed. |

| Optimization | Users must manage partitioning and file compaction. | Fully automatic pruning based on query predicates. |

| Performance Impact | Depends on user-defined partitioning strategy. | Consistently fast with Snowflake’s automatic optimizations. |

How Snowflake Achieves This for Omnichannel Sales Operations

Scenario: Sales Trend Analysis

Data Structure:

- Table: SALES_DATA

- Micro-partitioning: Automatically handled by Snowflake.

Code Example in Snowflake

- Querying Data with Micro-Partition Pruning:

- Snowflake automatically prunes irrelevant data using query predicates.

— Query sales trends for a specific category and time range

SELECT REGION, SUM(SALES_AMOUNT) AS TOTAL_SALES

FROM SALES_DATA

WHERE CATEGORY = ‘Electronics’

AND SALE_DATE BETWEEN ‘2024-01-01’ AND ‘2024-06-30’

GROUP BY REGION;

- Performance Features:

- Micro-Partition Pruning ensures that only relevant partitions are scanned.

- Result Cache stores the output of the above query for future identical queries.

Optimization Tips in Snowflake

- Clustering:

- Use Cluster Keys to optimize data for frequently used columns like CATEGORY and SALE_DATE.

- ALTER TABLE SALES_DATA CLUSTER BY (CATEGORY, SALE_DATE);

- Materialized Views:

- Create materialized views for frequently accessed aggregations.

- CREATE MATERIALIZED VIEW SALES_TRENDS AS

- SELECT REGION, SUM(SALES_AMOUNT) AS TOTAL_SALES

- FROM SALES_DATA

- GROUP BY REGION;

- Query History:

- Use Snowflake’s Query Profile to analyze performance and identify bottlenecks.

Key Differences for Omnichannel Sales Operations

|

Aspect |

Databricks |

Snowflake |

|

Setup Complexity |

Requires manual partitioning and caching. |

Fully automated; minimal user intervention. |

|

Real-Time Performance |

Faster for frequently queried data when cached. |

Fast out-of-the-box with automatic caching and pruning. |

|

Scalability |

Scales with Spark clusters. |

Scales seamlessly with Snowflake’s architecture. |

|

Use Case Suitability |

Better for iterative big data processing. |

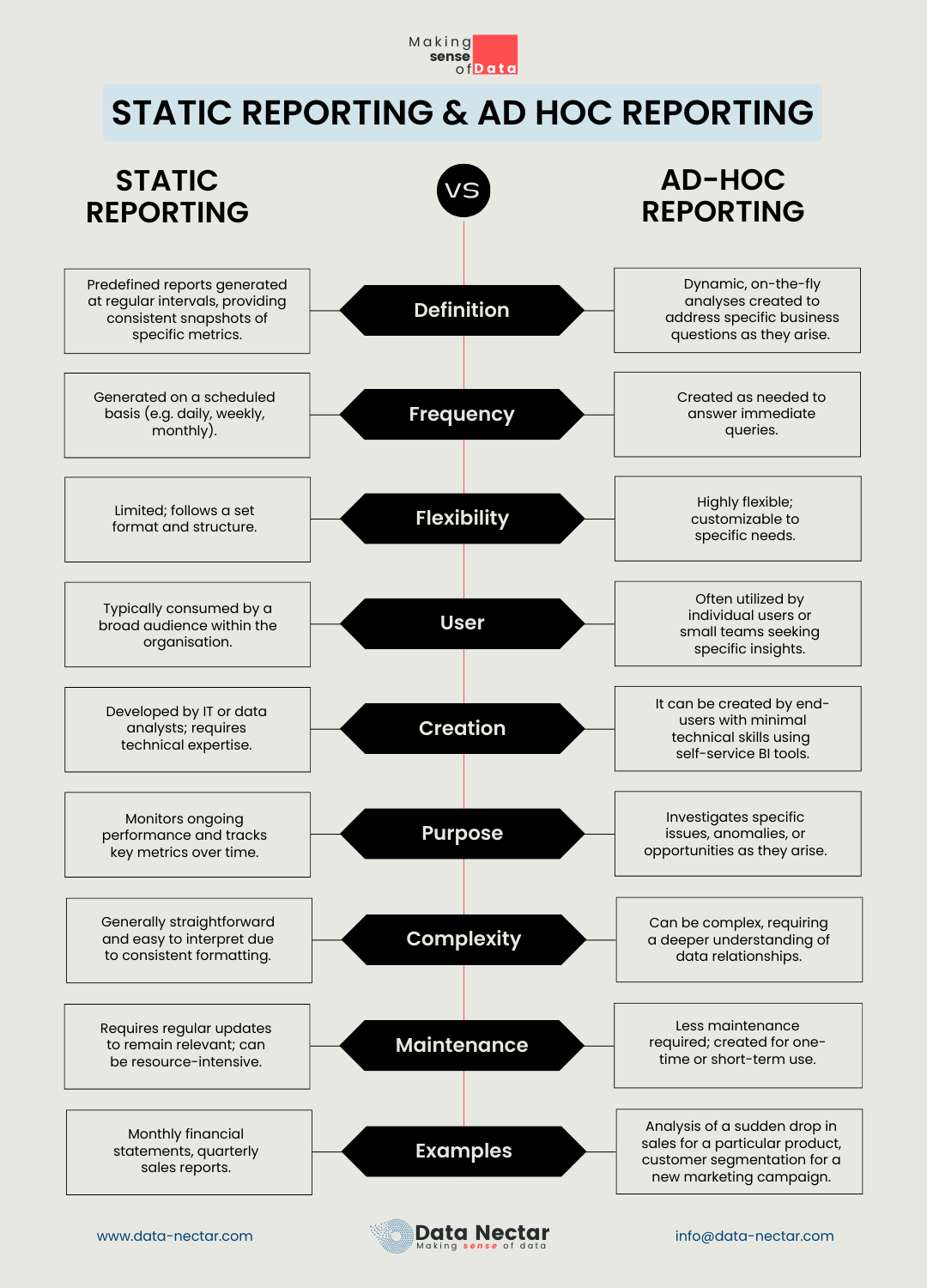

Better for ad-hoc analytics and structured queries. |

Conclusion

- Choose Databricks if your omnichannel sales operations require complex transformations, real-time streaming, or iterative data processing.

- Choose Snowflake if you prioritize ease of use, ad-hoc query performance, and automated optimizations for structured analytics.

Both platforms are powerful; the choice depends on your operational needs and the complexity of your data workflows.

Looking to bring these strategies to life? Hire a skilled developer to integrate Delta Cache and Data Skipping into your operations.

Recent Post

Accelerate Data Workflows: Optimize Omnichannel sales with Delta Cache and Skipping

What is Ad Hoc Analysis and Reporting?

[pac_divi_table_of_contents included_headings="on|on|on|off|off|off" minimum_number_of_headings="6" scroll_speed="8500ms" level_markers_1="decimal" level_markers_3="none" title_container_bg_color="#004274" _builder_version="4.22.2" _module_preset="default"...

Top Benefits of Data Governance for Your Organization

[pac_divi_table_of_contents included_headings="on|on|on|off|off|off" minimum_number_of_headings="6" scroll_speed="8500ms" level_markers_1="decimal" level_markers_3="none" title_container_bg_color="#004274" admin_label="Table Of Contents Maker"...